Cardiovascular Health Scan

Multi-platform vital signs and cardiovascular health risks using facial blood flow analysis.

Project Information

This covers my work on the pre-existing single scan and a clinical guideline-based split-capture scan. I will cover some guidelines, how it works, and the challenges that presented themselves.

Company

Advanced Health Intelligence

Cardiovascular Health Scan (CHS), also known as FaceScan, is a third-party technology that was licensed from Nuralogix/Anura. It operates as a cloud service that processes key facial features captured using the front camera. Every 5 seconds, a payload is sent to a cloud API called Deepaffex for processing. After 30 seconds, the service returns a payload of measurements (or fails).
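As a rough sketch of the timing described above, the snippet below lists when each payload would go out during one 30-second scan. The function name, and the assumption that a payload is sent at the end of every full 5-second chunk, are illustrative only; this is not the Anura SDK or the Deepaffex API.

```python
def payload_send_times(scan_seconds: int = 30, chunk_seconds: int = 5) -> list[int]:
    """Return the elapsed second at which each payload is sent.

    Assumption: one payload is sent at the end of every full chunk.
    """
    if scan_seconds <= 0 or chunk_seconds <= 0:
        raise ValueError("durations must be positive")
    return list(range(chunk_seconds, scan_seconds + 1, chunk_seconds))


times = payload_send_times()
print(times)       # [5, 10, 15, 20, 25, 30]
print(len(times))  # 6 payloads per scan
```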

The default UI for CHS is created by Anura and includes basic theming options. There is the option to create a custom UI, but this was never utilized. Initially available for iOS and Android platforms, CHS was later expanded to include support for web browser-capable platforms.

All features made available by Anura were sanitized, repackaged, themed, and offered to AHI partners through our own MultiScanSDK.

Slightly older Anura scan themed inside the AHI Demo app. This was current in AHI at the time as the new version (right) needed vetting.

An updated version in the Anura app, with a lot of my recommendations ;-)

It’s important to note that limitations exist for the technology by design. For instance, a face scan cannot provide accurate measurements for body composition or breathing rate due to the limited data and specific area where data is being captured (the human face).

Consequently, many of the measurements offered by Nuralogix (such as anxiety, facial age, T2D, blood glucose, cholesterol, etc.) have limited usefulness in clinical settings because they are inaccurate and are poor indicators for predicting someone’s health.

Markets

Cardiovascular Health Scan has its biggest impact in the healthcare and insurance markets. However, it was also originally pushed to fitness and corporate wellness. Some data points like the Anxiety Index appeared in pitch decks, but in reality, the data point was weak by design. Luckily, those markets were dropped, along with that data point.

Biometric Data

Our primary interest is in blood pressure and, to some extent, the risk predictions for cardiovascular disease, heart attack, and stroke.

However, it’s essential to recognize that measuring blood pressure in a clinical setting and using recommended guidelines differs significantly from what is offered out of the box by Anura.

To accurately measure blood pressure, it is important to follow established guidelines that take into account an individual's physical condition.

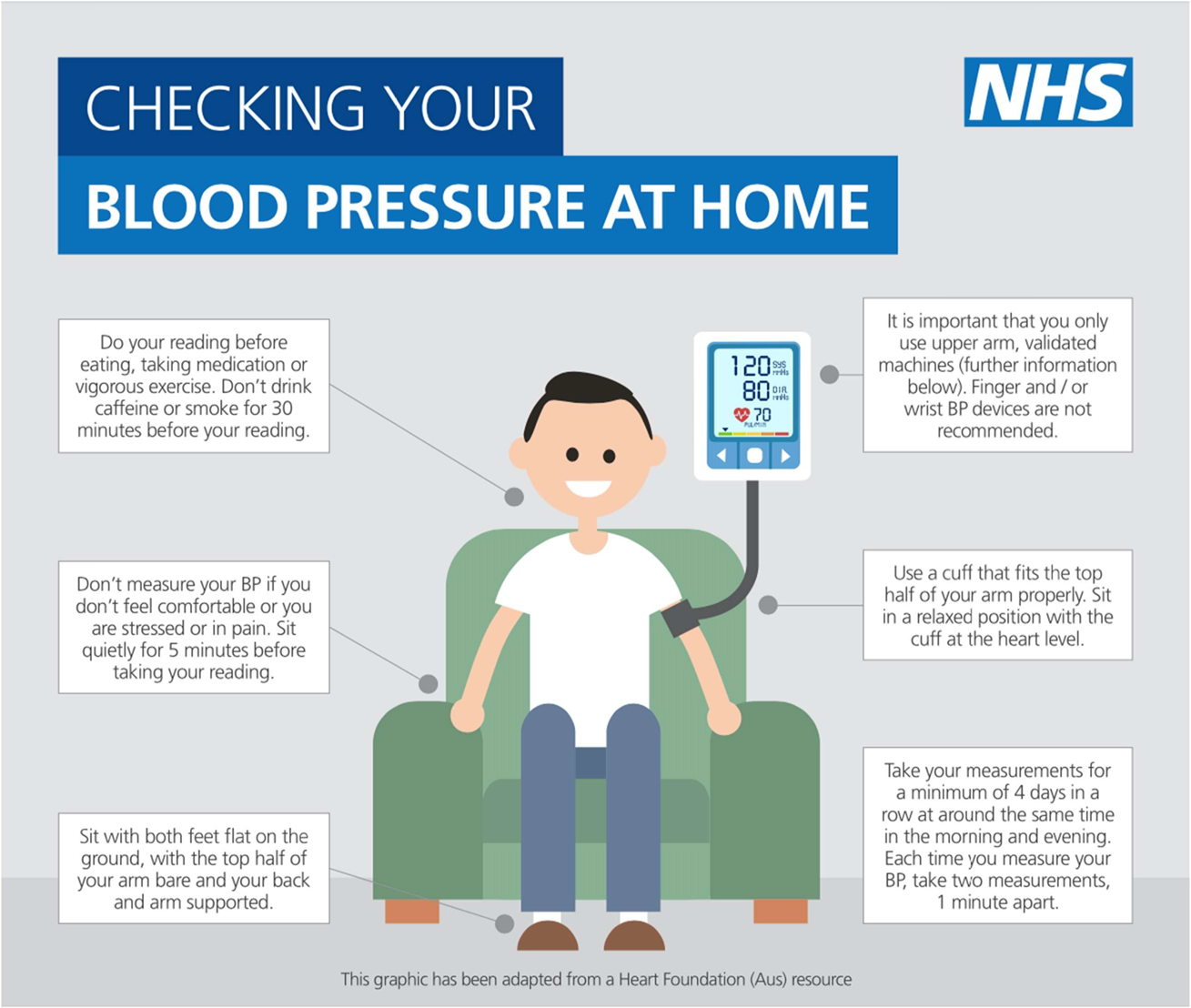

These are the standard guidelines for home measurement that were established:

⁍ Measure twice a day (in the morning and at night) for four to seven days, discarding the first reading.

⁍ For people with stable blood pressure, measure every 4 to 6 months.

⁍ Each measurement requires three scans, taken one minute apart.

⁍ Before the first scan, rest for five minutes.

⁍ Do not eat, drink (including caffeine and alcohol), or exercise for at least 30 minutes beforehand.

⁍ Sit upright with feet flat on the floor, avoid crossing your legs, and ensure that your back is straight and supported.

⁍ During the measurement, remain silent.
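To make the "three scans, discard the first" rule concrete, here is a minimal sketch of how one measurement could be summarized. It assumes the first scan of each measurement is the one discarded and that the remaining scans are combined with a plain mean; the `Reading` type, the function name, and the sample values are all made up for illustration.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Reading:
    systolic: float   # mmHg
    diastolic: float  # mmHg


def summarize_measurement(scans: list[Reading]) -> Reading:
    """Average one measurement's scans, discarding the first one.

    Assumption: the discard rule is applied per measurement and the
    remaining scans are combined with a simple mean.
    """
    if len(scans) < 3:
        raise ValueError("a measurement needs at least three scans")
    kept = scans[1:]  # drop the first scan
    return Reading(
        systolic=mean(r.systolic for r in kept),
        diastolic=mean(r.diastolic for r in kept),
    )


# Made-up example values for a single morning measurement.
morning = [Reading(138, 88), Reading(130, 84), Reading(128, 82)]
print(summarize_measurement(morning))
```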

When measuring blood pressure at home, it is necessary to make adjustments to account for over- or under-estimation.

Keep in mind that patients on home monitoring really do follow some or all of these steps. If a family member or friend has had heart-related issues and undergone surgery, they will have been required to take regular blood pressure readings at home.

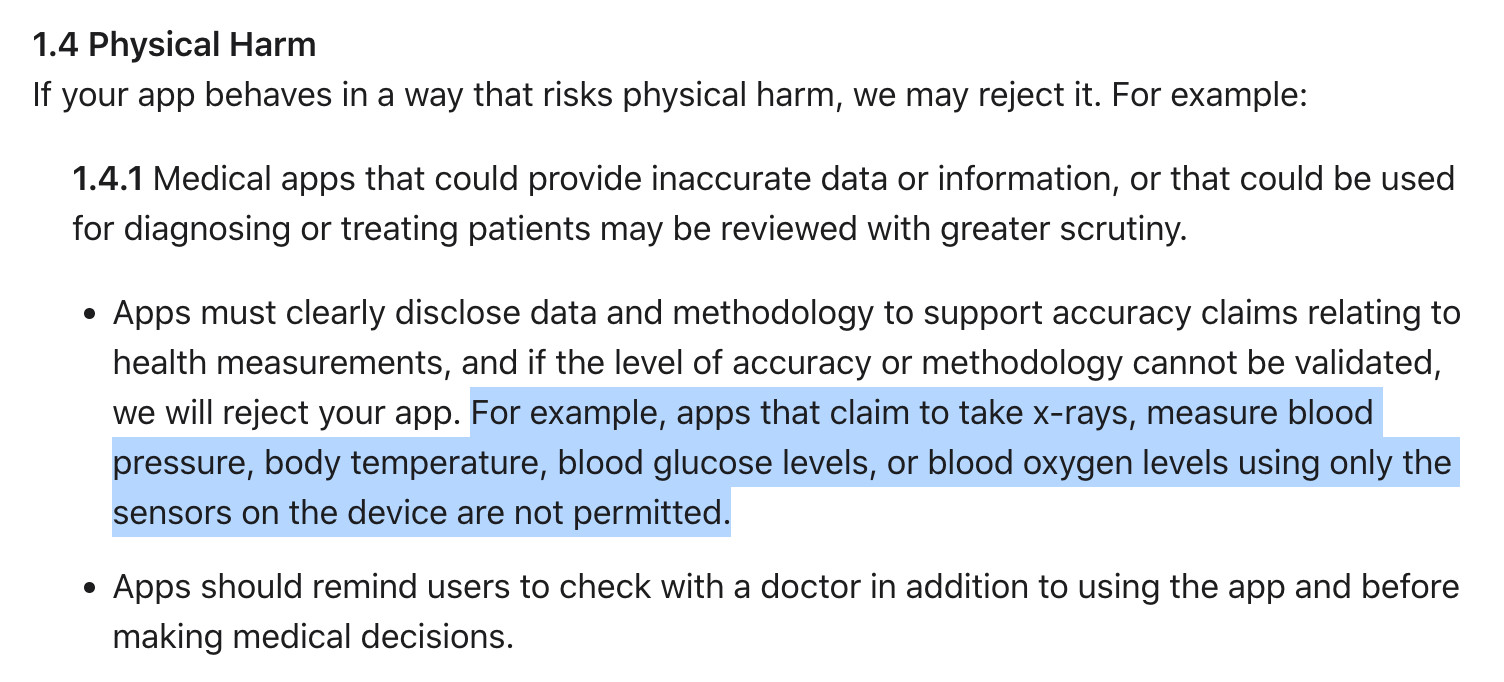

Google and Apple have strict guidelines around the display of medical data and the use of the phone as a medical device. Although such apps exist on some of the app stores, they risk being removed, and new apps submitted for approval will likely be rejected. Because the technology is embedded into partner apps via an SDK, there is a high risk that a partner's app will be removed or rejected. Conforming to these guidelines is a continuous process that requires a dedicated approval process and timeline.

Rather than displaying systolic and diastolic readings and using the term 'high blood pressure,' I chose to present the interpreted risk of hypertension (and hypotension). This approach may not be foolproof in the long term but it remains effective in the approval process.
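A minimal sketch of that idea: the raw numbers stay internal and only a worded risk label reaches the UI. The function name, the label wording, and especially the cut-off values are placeholders chosen for illustration; they are not clinical guidance and not the rules AHI or Anura actually used.

```python
def risk_label(
    systolic: float,
    diastolic: float,
    low: tuple[float, float] = (90, 60),    # placeholder hypotension cut-offs (mmHg)
    high: tuple[float, float] = (130, 80),  # placeholder hypertension cut-offs (mmHg)
) -> str:
    """Map a reading to a worded risk label instead of showing raw values."""
    if systolic < low[0] or diastolic < low[1]:
        return "Possible risk of hypotension"
    if systolic >= high[0] or diastolic >= high[1]:
        return "Possible risk of hypertension"
    return "No elevated risk detected"


print(risk_label(145, 95))  # Possible risk of hypertension
print(risk_label(85, 55))   # Possible risk of hypotension
print(risk_label(118, 76))  # No elevated risk detected
```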

Apple App Store Guidelines

Validating blood pressure is a complex process. Using a sphygmomanometer, a qualified clinician will measure the systolic pressure, while another will measure the diastolic pressure. In addition to these measurements, a third measurement is taken using an automatic BP monitor cuff. The phone camera captures facial data and predicts blood pressure for comparison. You can refer to the Anura study for more information if interested.

The automatic blood pressure cuff suffers from inaccuracies. Most automated home blood pressure monitors are accurate within about 5-15 mmHg compared to gold-standard sphygmomanometers. This is why you need to measure three times in a clinical setting, using a device approved in your region.

This level of validation is needed for academic acceptance and for approval by government or industry bodies (like the FDA). In the real world, that 5-15 mmHg might not make any difference, because the standard measurement guidelines compensate for the inaccuracies.

A Sphygmomanometer

Most people are surprised at how basic (but precise) some medical instruments are, like a stethoscope.

Fluctuating physical conditions (like breathing) mean you will likely see different values each time you measure. If a home scale gave you a different weight each time, we would consider it faulty, but with blood pressure, and with sensor-based measurement in general, the differences come down to signal processing and natural variation in the human body.

Multiplatform Fragmentation: Web-based FaceScan

The web-based capture offered by Anura did not meet the anticipated standards in terms of user experience and UI. When conducting a face scan with a handheld device and with a web browser, there was a significant disparity in the visual interface and experience.

Although AHI included it as a product offering, the web scan lacked refinement and the strategic leadership needed to make it succeed. This posed a considerable challenge when integrating with partners, so it had to be used in a limited capacity.

However, a notable advantage of the web-based scan is its immunity to app store guideline restrictions. This allows for the unrestricted display of blood pressure and all other readings.

(Video) Web FaceScan Demo

The demo shows various UX issues, such as camera selection, obstructive UI, low-quality bitmaps, low-quality video, and poor use of screen real estate.

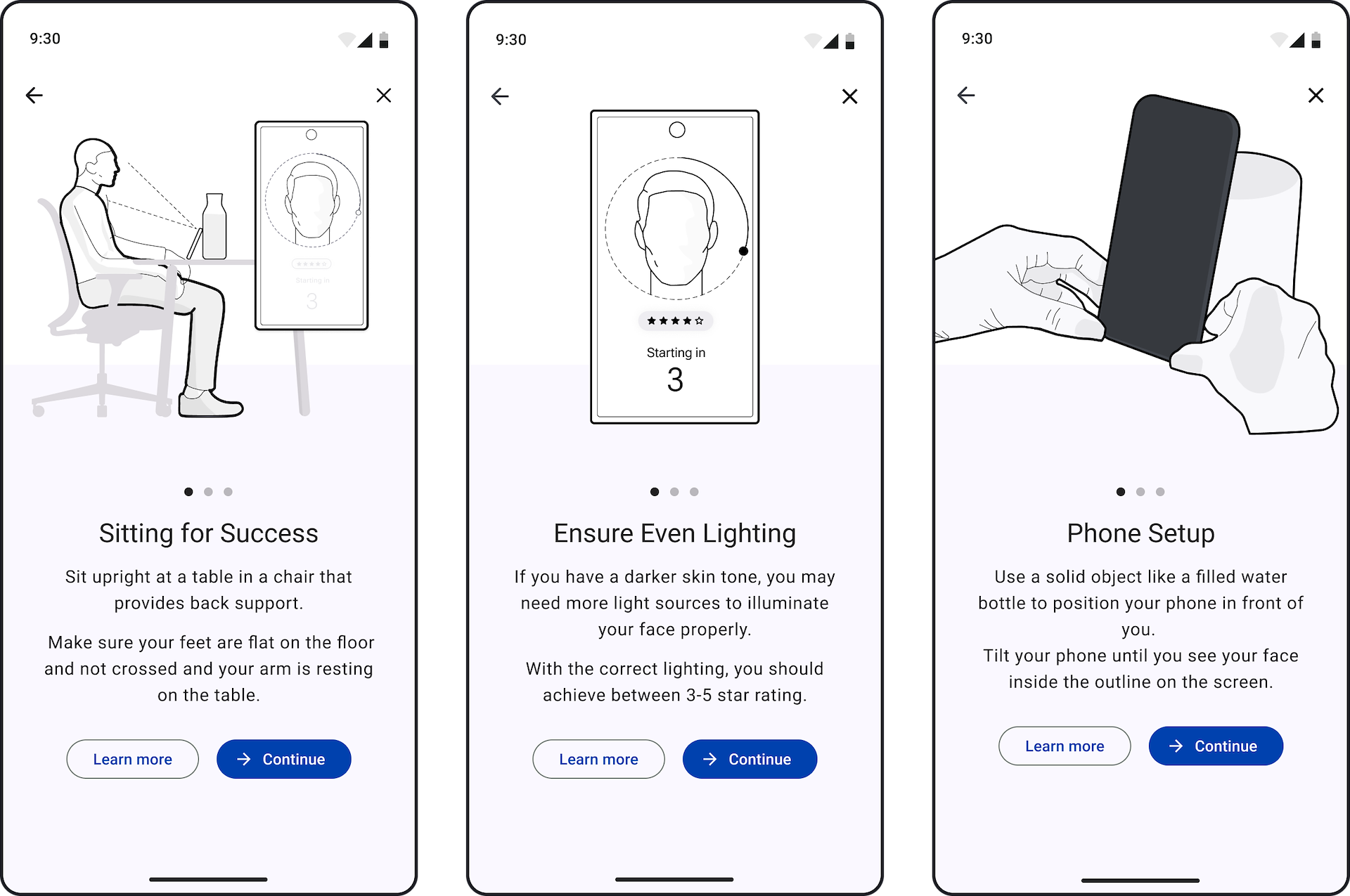

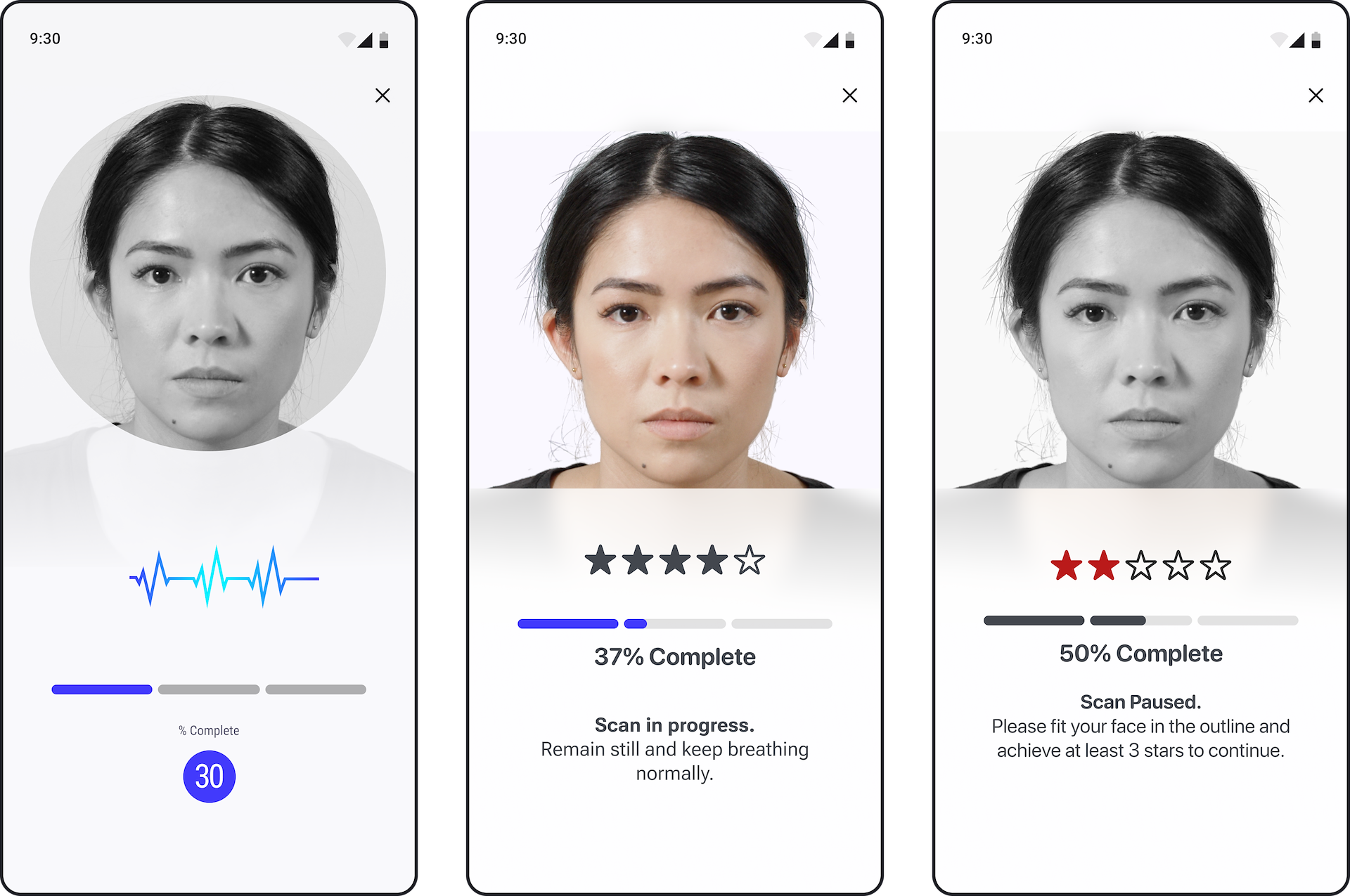

Lighting & Skin Tone

Photography, like all visual arts, presents certain challenges, one of which is lighting. The initial user interface of Anura utilized a star rating system, ranging from 1 to 5, to indicate the uniformity of facial illumination. Factors such as overexposure from a lamp or partial illumination due to sunlight or directional lighting, such as LED kitchen lights, could result in a lower star rating.

The system’s complexity increases when considering the Fitzpatrick scale and the light-blocking properties of melanin. Individuals with darker skin tones (4-5 on the Fitzpatrick scale) require more light to penetrate the skin surface to detect blood flow and other features. Consequently, they were advised to use additional lighting to illuminate their faces. However, this often resulted in overexposure in areas such as the nose, forehead, or cheeks, leading to a decrease in the star rating. The two indicators then disagreed: the scan had the high signal-to-noise ratio (SNR) it needed, while the UI showed a low star rating.

If people followed the star rating and achieved even lighting, the SNR would decrease, resulting in a failed scan.

Facial Feature Obstruction

For a successful scan, it is essential to have a robust signal-to-noise ratio (SNR), which requires multiple facial points to provide sufficient variation. However, certain factors such as tattoos, facial hair, failure to remove masks, or significant scarring could render the scan unfeasible.

Over time, these challenges can be addressed, but it necessitates the implementation of a support system or built-in detection mechanism to identify the reasons for scan failure. Analogous to Body Analysis, which requires confidence scoring, facial scanning also needs a confidence score along with the identification of failure points.

Currently, a user may experience a failed scan without any clear indication of the cause, as there is no AI detection for tattoos or scars. This detection must be performed on the device itself and not in the cloud, to ensure that no personally identifiable information (PII) leaves the device.

No UX Test Data

Partner companies are not obligated to share User Experience (UX) Test data. Nuralogix is no exception. Typically, companies perform due diligence by examining validation studies, which confirm or refute the accuracy and repeatability of data, with a particular focus on the specificity and sensitivity of the data. However, these studies do not necessarily reflect real-world usability. Emerging technologies often receive leniency in this regard, and this case was no exception.

Furthermore, we were dealing with third-party technology, over which we had no control in terms of timelines or prioritization of issues. This required us to conduct UX Testing on another company's technology, provide them with the report free of charge, and then hope for the necessary fixes.

I submitted feedback and a proposed solution for the skin tone issues to the Nuralogix team, and the solution was implemented three years later. It used the phone's screen as a light source: the background was turned white and, upon face detection, the screen brightness was set to 100%.

This update required the face to be approximately 20 cm from the device, which changed the physical posture needed for a capture and meant the scan guide had to be updated.

Cardiovascular Scan Guide

These illustrations would eventually need to be updated to match the new scan conditions for face illumination, which require the device to be held closer to the person’s face.

These are placeholder illustrations.

Part of the complexity of a scan session is how to deal with failure events, such as:

⁍ Loss of SNR quality through lighting or environmental changes

⁍ Interruptions leading to timeout or no face detection

⁍ Single vs Multiple failures

⁍ Device movement or displacement leading to resetting its position

⁍ Inactivity through no face detection

⁍ Network errors

Additionally, there are the usual challenges that come with performing the scan itself, such as lighting and facial feature obstruction. There is no way to know whether the rest period was actually followed, and it is unclear whether people can be kept focused for long stretches outside the engagement periods in order to meet the timing and frequency that clinical guidelines require.

To make matters more complicated, a scan will fail due to a poor SNR after 15 seconds. If the cause is environmental or obstruction-related, restarting the scan will likely lead to another failure.
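One way to act on this is to separate failure causes that persist across a restart from those that do not, and only restart blindly for the latter. The sketch below is an assumption about how that could be modeled; the enum members and the returned action names are hypothetical and do not come from the Anura SDK.

```python
from enum import Enum, auto


class FailureCause(Enum):
    LOW_SNR_LIGHTING = auto()
    LOW_SNR_OBSTRUCTION = auto()
    FACE_NOT_DETECTED = auto()
    DEVICE_MOVED = auto()
    NETWORK_ERROR = auto()


# Causes where an immediate restart is likely to fail again,
# because the underlying condition has not changed.
PERSISTENT_CAUSES = {
    FailureCause.LOW_SNR_LIGHTING,
    FailureCause.LOW_SNR_OBSTRUCTION,
}


def next_step(cause: FailureCause) -> str:
    """Pick a follow-up action for a failed scan (hypothetical action names)."""
    if cause in PERSISTENT_CAUSES:
        return "guide_user_to_fix_conditions"
    return "restart_scan"


print(next_step(FailureCause.LOW_SNR_LIGHTING))  # guide_user_to_fix_conditions
print(next_step(FailureCause.DEVICE_MOVED))      # restart_scan
```

This only helps if the cause of a failure can actually be identified, which is the gap described in the Facial Feature Obstruction section.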

What if they are on the final scan? What if a person completed two scans but had multiple failures on the last scan? Why did the scan fail? Was it SNR-related, or did their face move out of the outline?

At its core, a person has a time limit to perform a minimum of 3 scans. I needed to calculate how much time someone would have to commit to an assessment. On the natural path, where a person performs every step correctly and without error, a session takes a minimum of 8 minutes 30 seconds.

5 min rest + 30s + 1m wait + 30s + 1m wait + 30s = 8m 30s.
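The breakdown above can be checked with a few lines of arithmetic. This is only a sketch of the calculation; the constant and function names are mine, and it assumes one rest period followed by scans with a wait between consecutive scans.

```python
REST_SECONDS = 5 * 60   # initial rest
SCAN_SECONDS = 30       # one scan
WAIT_SECONDS = 60       # wait between consecutive scans


def minimum_session_seconds(scans: int = 3) -> int:
    """Error-free session length: rest once, then scans separated by waits."""
    if scans < 1:
        raise ValueError("at least one scan is required")
    return REST_SECONDS + scans * SCAN_SECONDS + (scans - 1) * WAIT_SECONDS


total = minimum_session_seconds()
print(total)              # 510
print(divmod(total, 60))  # (8, 30) -> 8 minutes 30 seconds
```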

How would this be impacted by one or more failure events? How many failures could occur before the assessment time elapsed, and they would need to restart? If I fail at 15 seconds, what is the impact? What if the scan fails at 29 seconds?

If the scan fails at 29 seconds, the person has to restart it, which adds some padding plus a pre-countdown of about 5 seconds on top of the 29 seconds already spent.

Finding the min/max of failure is worthwhile. I worked through some napkin math for certain scenarios. These would come in handy when comparing some alternate scan methods.
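The napkin math can be sketched roughly as follows. It assumes each failed attempt costs the seconds already spent, plus padding, plus the 5-second pre-countdown. The padding defaults to 0 because no figure is given for it, and the 600-second budget in the usage lines is an assumed value for illustration.

```python
BASE_SESSION_SECONDS = 5 * 60 + 3 * 30 + 2 * 60  # rest + three scans + two waits = 510
SCAN_SECONDS = 30


def failure_penalty(failed_at: int, padding: int = 0, pre_countdown: int = 5) -> int:
    """Seconds added by one failed scan: time already spent plus restart overhead."""
    if not 0 <= failed_at < SCAN_SECONDS:
        raise ValueError("a failure must happen during the 30-second scan")
    return failed_at + padding + pre_countdown


def session_seconds(failures: list[int], padding: int = 0) -> int:
    """Total session length, given the second at which each failed attempt stopped."""
    return BASE_SESSION_SECONDS + sum(failure_penalty(f, padding) for f in failures)


def max_failures(budget_seconds: int, failed_at: int, padding: int = 0) -> int:
    """How many identical failures fit in the budget before a full restart."""
    spare = max(budget_seconds - BASE_SESSION_SECONDS, 0)
    return spare // failure_penalty(failed_at, padding)


print(session_seconds([15]))  # 530
print(session_seconds([29]))  # 544
print(max_failures(600, 15))  # 4
print(max_failures(600, 29))  # 2
```

Under these assumptions, a 10-minute budget leaves room for only two worst-case failures, which shows how little slack the split-capture flow has.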

In-app messaging before the assessment can set expectations and drive outcomes. Stating that they will need “about 10 minutes of uninterrupted time” will lead to better outcomes.

By far, the biggest challenge to overcome is time.

Countdowns exist in other scans as pre-countdowns and timers for Health at Rest and Fitness Evaluation. You can read more about those in the Biometric Health Assessment project.

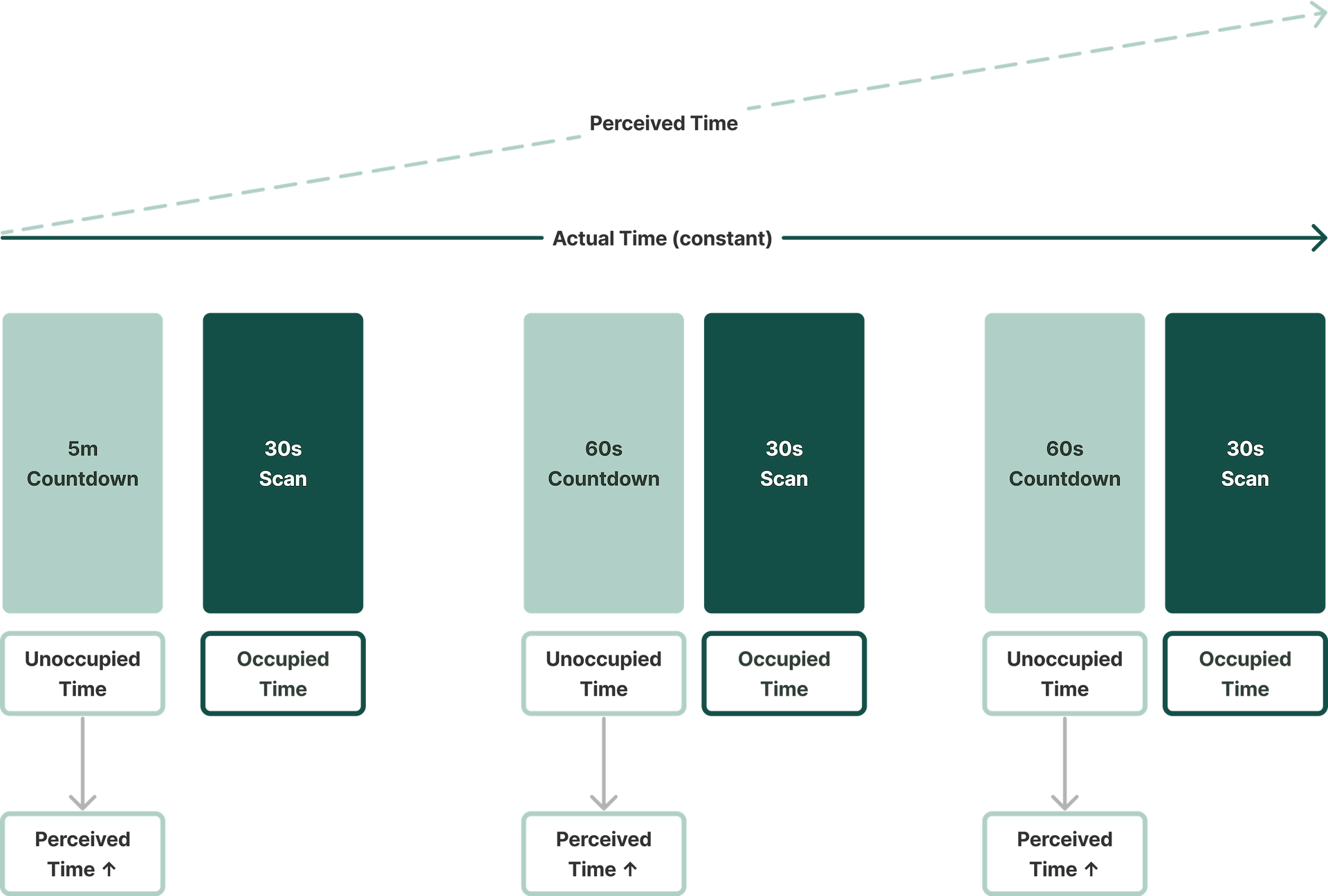

In essence, you had a 5-minute-long wait time, a 30-second engagement for the scan, and then a 1-minute wait time, and then another 30-second scan engagement.

In other words, you are slowed down, then you speed up, then you slow down again, repeating until finished. Overall, the perceived time with this kind of capture might feel quite long. A failure at any point immediately extends the session by however many seconds had elapsed when it occurred.

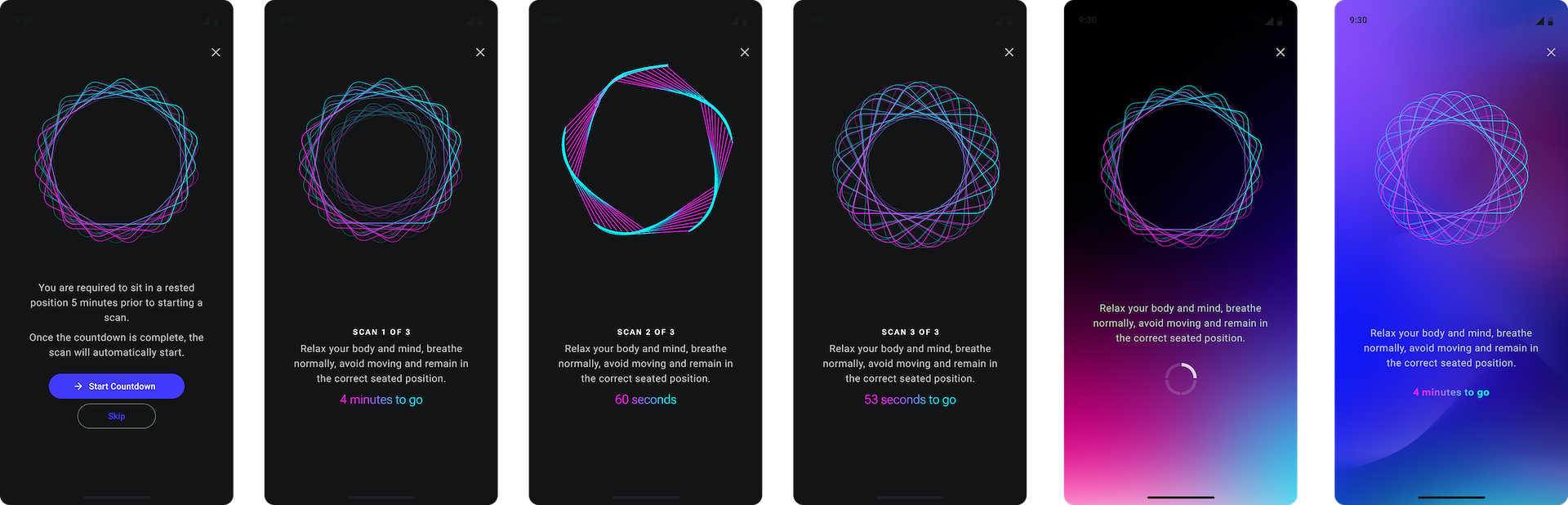

Keeping someone still and calm for five minutes is surprisingly difficult. The idea I pursued was to use a meditation-type experience and display some circular moving patterns and/or gradients to help people stay focused and breathe normally. This is close to the meditation experience on the Apple Watch but using the phone. There are plenty of meditation-like apps on iOS as a reference, so I wasn't too concerned about finding a suitable idea. It just had to be familiar (Jakob's Law). The plan was to make this modular and display interesting facts about a partner or blood pressure information. "Did you know…" type questions could keep them entertained for a while.

Perception Management v1

Relaxing and moving pattern.

Other modules: heart facts, a health-related quiz, or a 5-minute or 1-minute video with talking.

In terms of UI, there are plenty of choices for a 5-minute countdown:

⁍ A clock with seconds (e.g., 4:34:14), which gets watched and makes the wait feel longer

⁍ A progress bar or split progress bar

⁍ A worded message, e.g., "4 minutes to go…"

The focus should be on the animated patterns, while the countdown itself takes up a very small part of the screen. Using a worded message seemed the better choice.
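A worded countdown could be produced with something like the sketch below. The function name and exact wording are illustrative, and rounding down to whole minutes is an assumption rather than a decision recorded in the project.

```python
def countdown_message(remaining_seconds: int) -> str:
    """Turn the remaining rest time into a coarse, worded message."""
    if remaining_seconds <= 0:
        return "Starting scan…"
    if remaining_seconds < 60:
        return "Less than a minute to go…"
    minutes = remaining_seconds // 60  # round down to whole minutes
    unit = "minute" if minutes == 1 else "minutes"
    return f"{minutes} {unit} to go…"


print(countdown_message(274))  # 4 minutes to go…
print(countdown_message(60))   # 1 minute to go…
print(countdown_message(42))   # Less than a minute to go…
```

Because the text only changes about once a minute, there is nothing ticking on screen to watch.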

Having the choice to skip the 5-minute rest stage is a hot topic for discussion. A way to look at it is that you are on borrowed time with a person.

The advantage of having a circular pattern is that the outline for the face scan appears in the same place, so there is minimal movement to continue the scan.

Split capture has its restrictions. It’s very similar to the initial Body Analysis with set countdowns and a rigid (but predictable) experience. By contrast, continuous capture employs real-time SNR and confidence scoring to capture enough quality data for a successful result.

For example, the first scan, like the first BP cuff reading, is to be disregarded as a rule. But what if only the first 10 seconds were fluctuating and then normalized, and you ended up with a strong signal? With continuous capture, we could keep those 20 seconds of quality data.

I would also challenge the 60 seconds between scans. This is for blood pressure cuff-related reasons such as vascular recovery and residual pressure in the arteries. The CHS never touches the body and so does not suffer from any physical issues. Waiting 60 seconds can be eliminated.

So we are left with needing about 1 minute of data with a good-quality SNR for a capture. An intuitive UI can adjust when lighting or any other factor leads to a poor SNR, so dealing with failure becomes easier. You can remove the countdown and opt for segmented progress bars and % complete (maybe). No indication of time reduces perceived time, and with other perception management, the process would be 66% shorter.
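The continuous-capture idea can be sketched as a simple accumulator that counts only good-quality seconds and reports progress as a percentage rather than a clock. The sketch assumes one SNR sample per second and uses a made-up threshold and sample values; none of the names come from the Anura SDK.

```python
from typing import Iterable, Optional


def elapsed_until_complete(
    snr_samples: Iterable[float],
    threshold: float,
    required_good_seconds: int = 60,
) -> Optional[int]:
    """Return the elapsed second at which enough good data was collected.

    Assumption: one SNR sample per second; samples below the threshold
    are skipped rather than failing the whole scan. Returns None if the
    samples run out first.
    """
    good_seconds = 0
    for elapsed, snr in enumerate(snr_samples, start=1):
        if snr >= threshold:
            good_seconds += 1
            if good_seconds == required_good_seconds:
                return elapsed
    return None


def percent_complete(good_seconds: int, required_good_seconds: int = 60) -> int:
    """Progress for a segmented bar or % label, capped at 100."""
    return min(100, round(100 * good_seconds / required_good_seconds))


# Made-up signal: 10 noisy seconds, then a steady strong signal.
samples = [0.4] * 10 + [1.8] * 60
print(elapsed_until_complete(samples, threshold=1.0))  # 70
print(percent_complete(30))                            # 50
```

In this example the noisy first 10 seconds cost only 10 extra seconds, where a split-capture scan would have had to restart.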

Continuous Capture UI

These were from the research exploration and not ready for internal presentation.